Pi4 Microk8s Cluster

Raspberry Pi Micro Kubernetes Cluster

Raspberry Pis are a lot of fun. Here is another example: I decided to build a small Kubernetes cluster out of my Raspberry Pi 4s. Here is how it started.

When the Pi 4 first came out, I got two: a 2GB version and a 4GB version. The 4GB version was supposed to be my Raspberry Pi (Linux) desktop computer, while the 2GB version was supposed to go into my next robot, like this one. Unfortunately, due to various circumstances, the 2GB version stayed on the shelf until I passed it to my son to host his Java-based Discord bots (as a replacement for his old Orange Pi, which had more memory to start with).

And then the 8GB Pi 4 came along and shifted everything one step down: the 8GB version became a full desktop running 64-bit Raspberry Pi OS, and the 4GB one was 'demoted' to potential server. And then the idea occurred to me:

Why not make small cluster of Raspberry Pis!

That would achieve several different things at the same time:

- Promote Raspberry Pi

- Learn about Kubernetes

- Move all our 'server side' services to one, manageable place

- Move all our 'server side' services into a DMZ

And by 'server side' services I mean:

- Inbound SMTP and IMAP servers (*)
- Discord bots
- File server
- Local Pi Camera aggregator web server
- Why not add home automation data collection (ingestion) into some small local data lake for further analysis?

(*) Some 14-15 years ago I decided it was easier to write my own (inbound) SMTP and IMAP servers in Java (running on my Spring-based app server) than to learn how to configure existing software to do the same.

Note: this blog post is not a 'how to' recipe. It is more of an (incomplete) record of the journey I went through, and a reminder in case I need to do it again!

Hardware

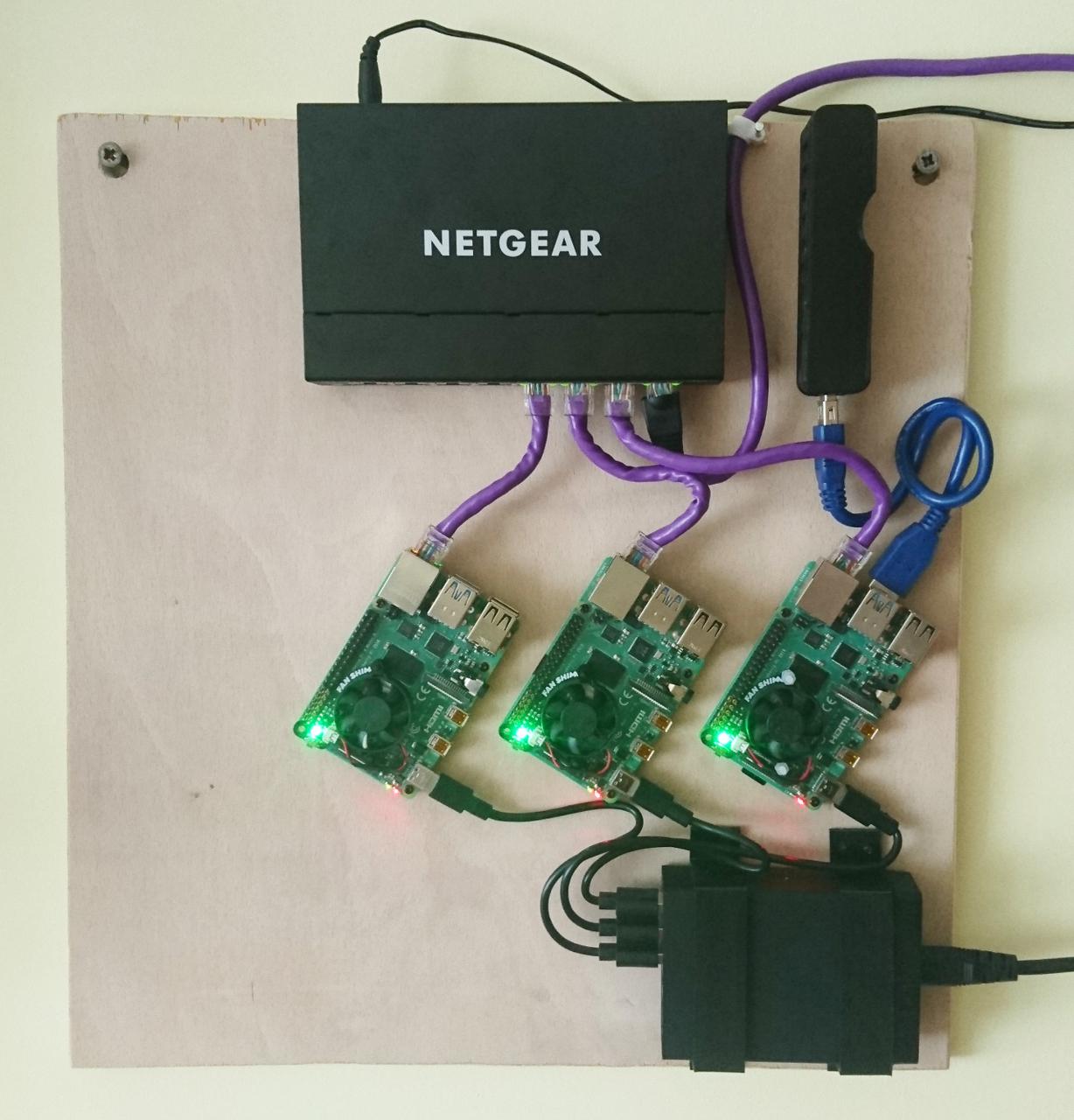

Since I already had the 2GB and 4GB versions of the Pi 4, adding another 4GB one would make a nice master/workers setup.

So, I ended up with:

| Item | Price |
|---|---|
| 1 x Raspberry Pi 4 with 2GB | ~ £34.00 |
| 2 x Raspberry Pi 4 with 4GB | ~ £104.00 |
| 3 x Fanshim | ~ £30.00 |
| 1 x Intel 660p 512GB M.2 NVMe SSD | ~ £70.00 |
| 1 x USB M.2 Enclosure M-Key | ~ £14.00 (eBay) |
| 1 x Short USB3 Type A cable | ~ £4.50 (eBay) |
| 1 x Anker Powerport 60W 6-Port USB Charger | ~ £19.00 (eBay) |
| 5 x 20cm USB-C to USB-A power cable | ~ £9.00 (eBay) |
| 1 x NETGEAR GS308 8 Port Smart Switch | ~ £28.00 |
| Total: | ~ £315 |

I already had the SSD and enclosure from my desktop Pi (I have another, 1TB one for the 8GB Pi 4), I taught my son how to crimp the short network cables himself, the 2GB and 4GB Raspberry Pi 4s were already at hand, and the power supply, the switch and the additional Raspberry Pi 4 weren't that expensive on their own.

Plus, we had an offcut of 12mm plywood in the shed, just asking for a DIY project.

Constraints

I put several constraints on myself, for no particular reason other than that they seemed reasonable.

- It should run Raspberry Pi OS (why not promote Raspberry Pi in every sense?)

- I shouldn't pay for 3 SD cards if I can boot off the SSD and the network (*)

- The master node should act as a Docker repository, maybe host some other repos, and be a file server dedicated to the cluster

- No extra DHCP servers should be installed (if it can be avoided)

- No per-service changes on the local router should be needed (except port forwarding and/or maybe local LAN DNS) (**)

(*) The SSD, in the worst-case scenario, has a throughput of 2000Mbit/s, gigabit ethernet has a maximum of 1000Mbit/s, and ordinary SD cards manage around 200-500Mbit/s (I know there are 1000Mbit/s or better SDs, too). So the reasoning is that if everything goes over the network, contention leaves each node around 1/3 of 1Gbit/s (roughly 333Mbit/s), which is still in the range of most SD cards anyway.
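The bandwidth argument above is simple arithmetic; written out as a small Python sketch it looks like this. It assumes the master's single gigabit link is shared evenly by three busy nodes (matching the 1/3 figure), which is a simplification, since real NFS traffic is bursty and rarely split evenly. The SD card range is the one quoted above.

```python
# Rough per-node bandwidth when several network-booted nodes share the
# master's single gigabit link. Assumes an even split, which real NFS
# traffic (bursty, uneven) won't exactly follow.

LINK_MBIT = 1000            # gigabit ethernet on the master
SSD_MBIT = 2000             # measured SSD throughput behind the USB3 enclosure
SD_RANGE_MBIT = (200, 500)  # typical SD card throughput quoted above


def per_node_share(link_mbit: float, nodes: int) -> float:
    """Bandwidth each node gets if `nodes` busy nodes share the link evenly."""
    if nodes < 1:
        raise ValueError("need at least one node")
    return link_mbit / nodes


if __name__ == "__main__":
    share = per_node_share(LINK_MBIT, 3)
    low, high = SD_RANGE_MBIT
    print(f"per-node share: {share:.0f} Mbit/s")
    print(f"comparable to an SD card: {low <= share <= high}")
    # The SSD can feed more than the whole link, so the network is the bottleneck.
    print(f"network is the bottleneck: {SSD_MBIT >= LINK_MBIT}")
```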

(**) A nice-to-have is for the master to proxy all network traffic, so that services are reachable on a static IP or domain name and nobody needs to know which particular worker node a service is running on. Even nicer would be keeping the same constraint but having the router handle where each service lives, with all of that managed/orchestrated by the cluster itself.

Software

There are a few options for installing Kubernetes on a Raspberry Pi, and Microk8s seemed the simplest: it is installed with snap and there is virtually no need for any other configuration and/or setup.

But it works on 64-bit OSes only, and most (if not all) online how-tos deploy it on 64-bit Ubuntu or similar. As I stated before, though, a 64-bit Raspberry Pi OS is available (even though it is in beta at the time of this blog post), so why not!

NFS, TFTP and PXE (network) booting

The first failure in the whole setup was booting off the SSD. JMicron's chipset (152d:0562) and the Raspberry Pi bootloader didn't like each other, and in order to move on I just brushed it aside: an old, semi-broken SD card that can hold the vfat boot partition was enough to boot the rest from the SSD. Not a big deal. The JMicron chipset also seemed to need quirk mode set, and in the end it provided 2000Mbit/s throughput, which was acceptable.
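The post doesn't say which quirk flag was used. A common workaround for JMicron enclosures that misbehave under UAS is the kernel parameter usb-storage.quirks=152d:0562:u (the u flag tells the kernel to ignore UAS and fall back to plain usb-storage), appended to the single line in cmdline.txt on the boot SD card. A minimal Python sketch, assuming that flag, which adds the entry idempotently:

```python
# Sketch: set a usb-storage quirk on the one-line cmdline.txt content.
# Assumption: the 'u' (ignore UAS) flag; the post doesn't say which flag was used.


def add_usb_quirk(cmdline: str, vid_pid: str, flags: str = "u") -> str:
    """Return cmdline with usb-storage.quirks containing <vid:pid:flags> exactly once."""
    entry = f"{vid_pid}:{flags}"
    params = cmdline.split()
    for i, param in enumerate(params):
        if param.startswith("usb-storage.quirks="):
            existing = param.split("=", 1)[1].split(",")
            # Drop any older entry for the same device, then append ours.
            kept = [q for q in existing if q and not q.startswith(vid_pid + ":")]
            params[i] = "usb-storage.quirks=" + ",".join(kept + [entry])
            return " ".join(params)
    params.append(f"usb-storage.quirks={entry}")
    return " ".join(params)


if __name__ == "__main__":
    # Hypothetical cmdline.txt content; the real one will differ.
    original = "console=serial0,115200 console=tty1 root=/dev/sda2 rootfstype=ext4 rootwait"
    print(add_usb_quirk(original, "152d:0562"))
```

Running it twice gives the same line, so it is safe to re-apply after OS updates rewrite cmdline.txt.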

TFTP

Setting up TFTP was easier: there's a tftpd-hpa package already in the repo, and its default configuration already points to /srv/tftp.

sudo apt install tftpd-hpa

The only thing I changed in /etc/default/tftpd-hpa was adding --verbose (really good for debugging) to TFTP_OPTIONS:

TFTP_USERNAME="tftp"
TFTP_DIRECTORY="/srv/tftp"
TFTP_ADDRESS="0.0.0.0:69"
TFTP_OPTIONS="--secure --verbose"

The main issue with tftpd-hpa was getting the service brought up at start-up. It has an entry in /etc/init.d/tftpd-hpa, and no matter what I tried (as explained here) it just didn't work (probably my fault), until I caved in, went for the dirtiest solution and added sleep 10s to the do_start() function in /etc/init.d/tftpd-hpa, just before:

start-stop-daemon --start --quiet --oknodo --exec $DAEMON -- \
    --listen --user $TFTP_USERNAME --address $TFTP_ADDRESS \
    $TFTP_OPTIONS $TFTP_DIRECTORY

Also, as per the Raspberry Pi Network Boot Documentation and the Pi 4 Bootloader Configuration, I created directories under /srv/tftp/ named after the MAC addresses of the workers (which, for good measure and easier debugging, I had previously assigned static IPs on the local router), for instance /srv/tftp/dc-a6-32-09-21-c1. Warning: somewhere in the documentation it showed that the MAC-address directory name should use upper-case letters, but that was wrong.
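Getting the directory name exactly right matters, since the bootloader looks for a folder named after the MAC address. A small Python sketch that normalises whatever form the MAC comes in (for example from the router's DHCP table, or /sys/class/net/eth0/address on the worker itself) into the lower-case, dash-separated name used above. That this is the form expected with TFTP_PREFIX=2 is my reading of the bootloader docs; the function name is hypothetical.

```python
# Sketch: turn a worker's MAC address into its per-Pi directory under /srv/tftp,
# in the lower-case, dash-separated form of /srv/tftp/dc-a6-32-09-21-c1 above.
import re


def tftp_dir_for_mac(mac: str, root: str = "/srv/tftp") -> str:
    """Normalise 'DC:A6:32:09:21:C1', dashed or undelimited forms into a TFTP dir path."""
    digits = re.sub(r"[^0-9a-fA-F]", "", mac).lower()
    if len(digits) != 12:
        raise ValueError(f"not a MAC address: {mac!r}")
    pairs = [digits[i:i + 2] for i in range(0, 12, 2)]
    return f"{root}/{'-'.join(pairs)}"


if __name__ == "__main__":
    print(tftp_dir_for_mac("DC:A6:32:09:21:C1"))
```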

Those directories were populated with the contents of the /boot partition of the 64-bit Raspberry Pi OS image. The only change needed there was in cmdline.txt (as per the documentation above):

console=serial0,115200 console=tty1 root=/dev/nfs nfsroot=<master-node-IP>:/srv/nfs/cluster-w<worker-number>,vers=3 rw ip=dhcp rootwait cgroup_enable=memory cgroup_memory=1 elevator=deadline

That way I can have as many worker nodes as I like, each booting separately from the same server.
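Because only the nfsroot= path differs between workers, cmdline.txt can be generated rather than hand-edited. A minimal Python sketch, assuming the parameter set shown above and the /srv/nfs/cluster-w<worker-number> layout; the master IP in the usage is a placeholder.

```python
# Sketch: generate the one-line cmdline.txt for a network-booted worker.
# Parameters mirror the line above; only nfsroot changes per worker.

BASE_PARAMS = [
    "console=serial0,115200",
    "console=tty1",
    "root=/dev/nfs",
    # nfsroot=... is inserted here, per worker
    "rw",
    "ip=dhcp",
    "rootwait",
    "cgroup_enable=memory",
    "cgroup_memory=1",
    "elevator=deadline",
]


def worker_cmdline(master_ip: str, worker: int) -> str:
    nfsroot = f"nfsroot={master_ip}:/srv/nfs/cluster-w{worker},vers=3"
    params = BASE_PARAMS[:3] + [nfsroot] + BASE_PARAMS[3:]
    # cmdline.txt must stay a single line.
    return " ".join(params) + "\n"


if __name__ == "__main__":
    print(worker_cmdline("192.168.4.2", 2), end="")  # hypothetical master IP
```

The output is what goes into cmdline.txt inside that worker's MAC-named TFTP directory.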

NFS

Luckily, there's the nfs-kernel-server package:

sudo apt install nfs-kernel-server

which needs /etc/exports updated. I added the following:

/srv/k8s/volumes 192.168.4.0/22(rw,sync,no_subtree_check,no_root_squash)

/srv/nfs/cluster-w2

/srv/tftp/dc-a6-32-09-21-c1

Also, as you can see from the paths, I created /srv/nfs/cluster-w<worker number> directories, into which I copied the root partition from the OS image:

rsync -xa --progress --exclude /boot / /srv/nfs/cluster-w2
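With more than one worker, the exports file grows by two lines per worker (its NFS root and its TFTP/boot directory). A small Python sketch that generates them; reusing the rw,sync,no_subtree_check,no_root_squash options and the 192.168.4.0/22 range for every entry is my assumption, since the post shows them only for /srv/k8s/volumes.

```python
# Sketch: build /etc/exports lines for the shared volume plus each worker's
# NFS root and TFTP (boot) directory. Same options for every entry (assumption).

OPTIONS = "rw,sync,no_subtree_check,no_root_squash"
CLIENTS = "192.168.4.0/22"


def exports_lines(workers: dict[int, str]) -> list[str]:
    """workers maps worker number -> MAC-based TFTP directory name."""
    paths = ["/srv/k8s/volumes"]
    for number, mac_dir in sorted(workers.items()):
        paths.append(f"/srv/nfs/cluster-w{number}")
        paths.append(f"/srv/tftp/{mac_dir}")
    return [f"{path} {CLIENTS}({OPTIONS})" for path in paths]


if __name__ == "__main__":
    for line in exports_lines({2: "dc-a6-32-09-21-c1"}):
        print(line)
```

After editing /etc/exports, sudo exportfs -ra re-reads it without restarting the NFS server.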

From there I had to update /srv/nfs/cluster-w2/etc/fstab to something like:

proc /proc proc defaults 0 0
<master-node-ip>:/srv/tftp/dc-a6-32-09-21-c1 /boot nfs defaults,vers=3 0 0

and updated /srv/nfs/cluster-w2/etc/hostname accordingly. I can't remember if anything else was needed at this point.
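The per-worker fstab only needs /proc plus the boot directory, because the root filesystem itself is mounted through nfsroot= on the kernel command line. A Python sketch that writes it, together with the hostname, into a worker's NFS root; the function names and the example values are hypothetical.

```python
# Sketch: write etc/fstab and etc/hostname inside a worker's NFS root.
from pathlib import Path


def worker_fstab(master_ip: str, mac_dir: str) -> str:
    lines = [
        "proc /proc proc defaults 0 0",
        # The worker's TFTP directory doubles as its /boot.
        f"{master_ip}:/srv/tftp/{mac_dir} /boot nfs defaults,vers=3 0 0",
    ]
    return "\n".join(lines) + "\n"


def write_worker_files(nfs_root: Path, hostname: str, master_ip: str, mac_dir: str) -> None:
    etc = nfs_root / "etc"
    etc.mkdir(parents=True, exist_ok=True)
    (etc / "fstab").write_text(worker_fstab(master_ip, mac_dir))
    (etc / "hostname").write_text(hostname + "\n")


# Example (hypothetical values, needs root):
# write_worker_files(Path("/srv/nfs/cluster-w2"), "cluster-w2", "192.168.4.2", "dc-a6-32-09-21-c1")
```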

PXE boot and firmware EEPROM update

As per the Pi 4 Bootloader Configuration, I created a separate SD card to configure the existing Pis for network booting and followed its instructions.

The only major difference was that I decided not to use a DHCP server (yet another one, inside a network that already has one) but the TFTP_IP config parameter instead. So I amended bootconf.txt to look like this:

[all]
BOOT_UART=0
WAKE_ON_GPIO=1
POWER_OFF_ON_HALT=0
DHCP_TIMEOUT=45000
DHCP_REQ_TIMEOUT=4000
TFTP_FILE_TIMEOUT=30000
ENABLE_SELF_UPDATE=1
DISABLE_HDMI=0
BOOT_ORDER=0xf41
SD_BOOT_MAX_RETRIES=1
USB_MSD_BOOT_MAX_RETRIES=1
TFTP_IP=<master-node-ip>
TFTP_PREFIX=2

and that was enough for the worker nodes to boot off the master's TFTP and NFS. Of course, I had to repeat the process for each worker node: boot it from the SD card and update the EEPROM before it could boot off the server. Enabling --verbose for tftpd-hpa was helpful: each time I didn't do something right, I was able to check how far it had got...
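BOOT_ORDER is read one hex digit at a time, starting from the least significant one, and each digit names a boot mode to try. A small Python decoder makes it easy to check a value before flashing; the mode table below is a subset written from my memory of the bootloader documentation, so treat it as an assumption and verify it against the current docs.

```python
# Sketch: decode a Pi 4 EEPROM BOOT_ORDER value into the sequence of boot
# modes the bootloader tries (least significant nibble first).
# Mode table: a subset, from memory of the bootloader docs (verify!).

BOOT_MODES = {
    0x1: "SD",
    0x2: "NETWORK",
    0x4: "USB-MSD",
    0xE: "STOP",
    0xF: "RESTART",
}


def decode_boot_order(value: int) -> list[str]:
    modes = []
    while value:
        nibble = value & 0xF
        modes.append(BOOT_MODES.get(nibble, f"UNKNOWN(0x{nibble:x})"))
        value >>= 4
    return modes


if __name__ == "__main__":
    for order in (0xF41, 0xF21):
        print(hex(order), decode_boot_order(order))
```

If the table is right, the value in the bootconf.txt above decodes to SD, USB-MSD, RESTART, while a worker that is meant to boot from the network would normally need NETWORK (0x2) somewhere in the list, for example 0xf21. It may be worth checking which value actually ended up in the EEPROM; vcgencmd bootloader_config prints it on a running Pi.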

Micro Kubernetes

As I said originally, it was supposed to be zero-conf and easy to install. So:

sudo apt install snapd
sudo snap install microk8s --classic

Alongside microk8s I installed docker and added the pi user to both the docker and microk8s groups:

sudo apt install docker docker.io
sudo usermod -aG docker pi
sudo usermod -aG microk8s pi
sudo chown -f -R pi ~/.kube

Now I had Microk8s running on all three Raspberry Pis (master and workers), but each as a separate, stand-alone node. Adding nodes to the master (control plane) is really easy with microk8s too:

microk8s add-node

on the master node, and then microk8s join xxx (as per the output of the command above) on the workers.

I also ran into some gotchas. The worst was when I tried to exclude the master node from scheduling by making it leave the cluster, running:

microk8s leave

on the master node. Strangely enough, everything continued to work well, but the logs were spammed with errors. See here.

To avoid the master being used as a node in the cluster (it has only 2GB of memory and is meant for other things), I fell back to just cordoning it:

kubectl cordon <master node name>

(the master node name can be retrieved with kubectl get nodes)

But that's not enough. containerd was failing due to some issues with snapshotting. After more research I came to two conclusions:

- containerd needs the snapshotter set to native

- the kernel must support cgroup's cpu.cfs_period_us and cpu.cfs_quota_us

The first is easy to fix. In /var/snap/microk8s/current/args/containerd-template.toml I changed

snapshotter = "${SNAPSHOTTER}"

to

snapshotter = "native"
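The snapshotter change is a one-line edit, but since it has to be repeated on every node, a tiny Python sketch can do it reliably. It assumes the template contains a snapshotter = "..." line as quoted above and rewrites its value to "native" while keeping the indentation. Microk8s presumably needs a restart (microk8s stop, then microk8s start) to pick up the change.

```python
# Sketch: switch the containerd snapshotter in Microk8s' template to "native".
import re
from pathlib import Path

TEMPLATE = Path("/var/snap/microk8s/current/args/containerd-template.toml")
_SNAPSHOTTER = re.compile(r'^([ \t]*snapshotter[ \t]*=[ \t]*)"[^"]*"', re.MULTILINE)


def force_native_snapshotter(toml_text: str) -> str:
    """Replace every snapshotter = "..." value with "native", keeping indentation."""
    return _SNAPSHOTTER.sub(r'\1"native"', toml_text)


if __name__ == "__main__" and TEMPLATE.exists():
    # Needs root; only runs on a node where Microk8s is installed.
    TEMPLATE.write_text(force_native_snapshotter(TEMPLATE.read_text()))
```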

As for the second problem...

64bit Raspberry Pi OS Kernel Support for CPU CFS_BANDWIDTH

I discovered that the original kernel doesn't have CONFIG_CFS_BANDWIDTH set. The error was:

Error: failed to create containerd task: OCI runtime create failed: container_linux.go:349: starting container process caused "process_linux.go:449: container init caused \"process_linux.go:415: setting cgroup config for procHooks process caused \\\"failed to write \\\\\\\"100000\\\\\\\" to \\\\\\\"/sys/fs/cgroup/cpu,cpuacct/kubepods/besteffort/pod0eddb508-c21d-4e81-a744-2c37a7f0e4e1/nginx/cpu.cfs_period_us\\\\\\\": open /sys/fs/cgroup/cpu,cpuacct/kubepods/besteffort/pod0eddb508-c21d-4e81-a744-2c37a7f0e4e1/nginx/cpu.cfs_period_us: permission denied\\\"\"": unknown

This issue helped me understand what was needed: CONFIG_CFS_BANDWIDTH=y in the kernel config.
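Before rebuilding anything, it is worth checking what the current kernel (or a freshly configured source tree) actually has. A Python sketch that looks up an option in a kernel config; reading /proc/config.gz assumes the running kernel was built with CONFIG_IKCONFIG_PROC, otherwise only a source tree's .config can be checked.

```python
# Sketch: check a kernel config for CONFIG_CFS_BANDWIDTH=y.
import gzip
from pathlib import Path
from typing import Optional


def config_value(config_text: str, option: str) -> Optional[str]:
    """Return the value ('y', 'm', ...) of option, or None if unset/commented out."""
    prefix = option + "="
    for line in config_text.splitlines():
        line = line.strip()
        if line.startswith(prefix):
            return line[len(prefix):]
    return None  # e.g. '# CONFIG_CFS_BANDWIDTH is not set'


def read_config(path: Path) -> str:
    data = path.read_bytes()
    if path.suffix == ".gz":
        data = gzip.decompress(data)
    return data.decode(errors="replace")


if __name__ == "__main__":
    for candidate in (Path("/proc/config.gz"), Path(".config")):
        if candidate.exists():
            value = config_value(read_config(candidate), "CONFIG_CFS_BANDWIDTH")
            print(f"{candidate}: CONFIG_CFS_BANDWIDTH={value}")
            break
```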

So the next step was to recompile the kernel so Kubernetes could work. I followed the official Pi documentation for [Kernel building](https://www.raspberrypi.org/documentation/linux/kernel/building.md) and got it done relatively painlessly on my desktop Pi (or use the master node, whichever is more convenient).

Things I learned in the process:

- run make bcm2711_defconfig before adding (uncommenting) CONFIG_CFS_BANDWIDTH=y in the .config file

- use ARCH=arm64 make -j4 Image.gz modules dtbs as the make command (note the ARCH=arm64)

- the result is in ./arch/arm64, for instance ./arch/arm64/boot/Image (the 64-bit kernel image mustn't be compressed).

Installing the kernel was as simple as copying ./arch/arm64/boot/Image to /boot/kernel8.img (which on the master node lives on the SD card) and to /srv/tftp/<mac-address>/kernel8.img, copying the overlays to /boot/ and /srv/tftp/<mac-address>/ as per the documentation above, and copying the modules. I installed the modules by copying the whole built kernel source tree to the master, running sudo make modules_install there, and then copying them from /lib/modules/<new modules dir>-v8+ to /srv/nfs/cluster-w<worker-no>/lib/modules/.

I'm sure there are easier ways to do all of it. But in general, I just installed the newly built kernel on the master node and all workers (by copying the appropriate files onto the local SSD's root FS).
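With more workers the copying gets repetitive, so it can help to write the destinations down once. A Python sketch that builds the copy plan described above (kernel image and overlays for the master's /boot and each worker's TFTP directory, modules into each worker's NFS root) and only prints it unless dry_run is turned off. It leaves out the device-tree .dtb files that, as far as I recall, the kernel building guide also copies; the function names and the version string in the example are hypothetical.

```python
# Sketch: plan (and optionally perform) the kernel install copies.
import shutil
from pathlib import Path


def install_plan(src: Path, modules_dir: str, workers: dict[int, str]) -> list[tuple[Path, Path]]:
    """src: built kernel tree; modules_dir: the '<version>-v8+' name under /lib/modules."""
    image = src / "arch/arm64/boot/Image"
    overlays = src / "arch/arm64/boot/dts/overlays"
    plan = [(image, Path("/boot/kernel8.img")), (overlays, Path("/boot/overlays"))]
    for number, mac_dir in sorted(workers.items()):
        boot = Path("/srv/tftp") / mac_dir
        plan.append((image, boot / "kernel8.img"))
        plan.append((overlays, boot / "overlays"))
        # Modules were installed on the master first with 'sudo make modules_install'.
        plan.append((Path("/lib/modules") / modules_dir,
                     Path(f"/srv/nfs/cluster-w{number}/lib/modules") / modules_dir))
    return plan


def apply_plan(plan: list[tuple[Path, Path]], dry_run: bool = True) -> None:
    for source, target in plan:
        print(f"{source} -> {target}")
        if dry_run:
            continue
        if source.is_dir():
            shutil.copytree(source, target, dirs_exist_ok=True)
        else:
            shutil.copy2(source, target)


if __name__ == "__main__":
    apply_plan(install_plan(Path("/home/pi/linux"), "5.4.51-v8+", {2: "dc-a6-32-09-21-c1"}))
```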

After booting everything again, /sys/fs/cgroup/cpu,cpuacct/cpu.cfs_period_us and /sys/fs/cgroup/cpu,cpuacct/cpu.cfs_quota_us appeared, so containerd started running properly.
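A quick Python sketch for confirming this on each node after the reboot; it checks for the two cgroup v1 files quoted above, with the directory parameterised so the function can be tried anywhere.

```python
# Sketch: confirm the CFS bandwidth files exist after booting the new kernel.
from pathlib import Path

CFS_FILES = ("cpu.cfs_period_us", "cpu.cfs_quota_us")


def missing_cfs_files(root: Path = Path("/sys/fs/cgroup/cpu,cpuacct")) -> list[str]:
    return [name for name in CFS_FILES if not (root / name).exists()]


if __name__ == "__main__":
    missing = missing_cfs_files()
    print("CFS bandwidth files present" if not missing else f"missing: {', '.join(missing)}")
```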

Now back to microk8s config...

Microk8s, again

Microk8s provides really nice diagnostics with:

microk8s inspect

IPTABLES

One of the things that came out of it was setting up iptables, and the output of the command is enough to go on. But those changes never persisted on Raspberry Pi OS (which is Debian based). In order to make them stick (FORWARD ACCEPT), /etc/sysctl.conf needs to have net.ipv4.ip_forward=1 uncommented.
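Uncommenting that line is easy by hand, but when it has to be done in every worker's NFS root as well, a small Python sketch helps. It rewrites any (possibly commented-out) net.ipv4.ip_forward line to net.ipv4.ip_forward=1, or appends one if none exists; sudo sysctl -p applies the file without a reboot.

```python
# Sketch: make IPv4 forwarding persistent in /etc/sysctl.conf content.
import re

_FORWARD = re.compile(r"^[ \t]*#?[ \t]*net\.ipv4\.ip_forward[ \t]*=.*$", re.MULTILINE)


def enable_ip_forward(sysctl_text: str) -> str:
    if _FORWARD.search(sysctl_text):
        return _FORWARD.sub("net.ipv4.ip_forward=1", sysctl_text)
    # No existing line: append one, making sure it starts on a new line.
    suffix = "" if not sysctl_text or sysctl_text.endswith("\n") else "\n"
    return sysctl_text + suffix + "net.ipv4.ip_forward=1\n"
```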

At this stage I had microk8s running and scheduling containers on the worker nodes (but not on the master), and the log files (/var/log/syslog) on the master and workers were no longer constantly spammed with errors.

Metal Load Balancer

Next was to sort out the other constraints. First was accessing services (deployments, pods) seamlessly. After some more research I learnt that in order to have a LoadBalancer service working under Microk8s one needs to 'enable' metallb (and here).

But for it to work, it needs to be configured in such a way that the local router (a Draytek 2960 in my case) knows about it and metallb can talk to the router over BGP (Border Gateway Protocol), too. So, I created metallb-conf.yaml:

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    peers:
    - peer-address: 192.168.4.1
      peer-asn: 100
      my-asn: 200
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.5.10-192.168.5.99

In my router I set up a neighbour with ASN '200' and configured the router's own ASN as '100'. This config gives metallb the range 192.168.5.10-192.168.5.99 to use.
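A quick sanity check of that range with Python's ipaddress module: how many LoadBalancer IPs it provides, and whether it sits inside the subnets used for the cluster (192.168.4.0/22 for the DMZ LAN, with 192.168.5.0/24 reserved for service IPs, as described in the network configuration section of this post).

```python
# Sketch: sanity-check the MetalLB address range against the DMZ subnets.
import ipaddress


def pool_addresses(start: str, end: str) -> list[ipaddress.IPv4Address]:
    first, last = ipaddress.IPv4Address(start), ipaddress.IPv4Address(end)
    if last < first:
        raise ValueError("range end is before start")
    return [ipaddress.IPv4Address(i) for i in range(int(first), int(last) + 1)]


lan = ipaddress.IPv4Network("192.168.4.0/22")
services = ipaddress.IPv4Network("192.168.5.0/24")
pool = pool_addresses("192.168.5.10", "192.168.5.99")

print(len(pool))                           # number of LoadBalancer IPs available
print(all(ip in services for ip in pool))  # whole pool inside the services /24
print(services.subnet_of(lan))             # services /24 sits inside the LAN /22
```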

Dashboard

Next was to 'install' the Microk8s dashboard:

microk8s enable dashboard

and added a new config, dashboard-lb-service.yaml, to expose it locally on a 'static' IP (which I associated with a domain name in the router):

kind: Service
apiVersion: v1
metadata:
  namespace: kube-system
  name: kubernetes-dashboard-lb-service
spec:
  ports:
  - name: http
    protocol: TCP
    port: 443
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  type: LoadBalancer
  loadBalancerIP: 192.168.5.10
  externalTrafficPolicy: Cluster

It exposes the HTTPS port on (an invented IP address) 192.168.5.10 on that LAN.

Storage

Enabling storage was easy:

microk8s enable storage

After that I could easily create a persistent volume on NFS, for example:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: cameras-website-nfs
spec:
  capacity:
    storage: 110Mi
  accessModes:
  - ReadWriteMany
  storageClassName: nfs
  nfs:
    server: <master-node-ip>
    path: "/srv/k8s/volumes/cameras-website"

Remember that I had

/srv/k8s/volumes 192.168.4.0/22(rw,sync,no_subtree_check,no_root_squash)

in the /etc/exports file, exposing the /srv/k8s/volumes directory (and all its subdirectories) only to the worker nodes and other machines in the given IP range.
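Since every NFS-backed volume follows the same shape, the manifest can also be generated. A Python sketch that builds the PersistentVolume above as a dict and prints it as JSON, which kubectl apply -f accepts as well as YAML (for example piped in with kubectl apply -f -). The helper name and the master IP are hypothetical; the directory under /srv/k8s/volumes presumably has to exist on the master before a pod can mount it.

```python
# Sketch: generate an NFS PersistentVolume manifest as JSON.
import json


def nfs_pv(name: str, server: str, path: str, size: str,
           storage_class: str = "nfs") -> dict:
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": size},
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "nfs": {"server": server, "path": path},
        },
    }


if __name__ == "__main__":
    pv = nfs_pv("cameras-website-nfs", "192.168.4.2",  # hypothetical master IP
                "/srv/k8s/volumes/cameras-website", "110Mi")
    print(json.dumps(pv, indent=2))
```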

Local Registry

Microk8s has a helper config for setting up a docker registry on the local nodes, which can easily be added with:

microk8s enable registry

but unfortunately it uses hostPath for its persistent volume, which means the images live only on one node's local filesystem and go away when the registry is removed. I wanted something more persistent, and to know where the images are stored. So I copied /snap/microk8s/current/actions/registry.yaml to my home dir and amended it a bit. First I added registry-nfs-storage.yaml:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: registry-nfs
spec:
  capacity:
    storage: 50Gi
  accessModes:
  - ReadWriteMany
  storageClassName: nfs
  nfs:
    server: <master-node-ip>
    path: "/srv/k8s/volumes/registry"

and amended registry.yaml:

...
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: registry-claim
  namespace: container-registry
spec:
  accessModes:
  - ReadWriteMany
  volumeMode: Filesystem
  volumeName: registry-nfs
  storageClassName: nfs
  resources:
    requests:
      storage: 40Gi
---

(note the added volumeName and the changed storageClassName). Then I applied both:

kubectl apply -f registry-nfs-storage.yaml
kubectl apply -f registry.yaml
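Kubernetes only binds a claim to a volume whose capacity is at least the requested size, which is why the 40Gi request fits the 50Gi volume. A small Python sketch of that comparison; it handles only plain byte counts and the binary suffixes Ki to Ti, a subset of the quantity formats Kubernetes accepts.

```python
# Sketch: check that a PVC request fits the PV it is meant to bind to.
# Only binary suffixes (Ki..Ti) and plain bytes are handled.

_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    for suffix, factor in _BINARY.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def claim_fits(pv_capacity: str, pvc_request: str) -> bool:
    return to_bytes(pvc_request) <= to_bytes(pv_capacity)


if __name__ == "__main__":
    print(claim_fits("50Gi", "40Gi"))  # registry volume vs registry claim
```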

Now I was able to delete and re-install the registry deployment and play with it all without needing to push new images to that registry again and again. BTW, as the registry is deployed with a NodePort service, it is available on all nodes simultaneously, including the master node. So, to push a new docker image I did the following:

docker build --tag test:1.0 .
docker images
docker tag 660ab765ee5c cluster-master:32000/test:1.0
docker push cluster-master:32000/test:1.0

where cluster-master is domain name associated with my master node.

Sundry setup

You might have noticed that I never typed:

microk8s.kubectl

as many tutorials suggest. Instead, I created a ~/.bash_kubectl_autocompletion.sh file with:

microk8s.kubectl completion bash > ~/.bash_kubectl_autocompletion.sh

and added

if [ -f ~/.bash_kubectl_autocompletion.sh ]; then
    . ~/.bash_kubectl_autocompletion.sh
fi

to the end of ~/.bashrc

After that I can use plain kubectl, and I get completion after typing kubectl. Most notably, namespaces, deployments.apps and pods are autocompleted, too.

More Gotchas And How To Fix Them

For some reason I had many issues (mostly involving flanneld and etcd), and one particular error I fixed by starting microk8s as root (even though user pi is in the microk8s group).

sudo microk8s start

fixed the

microk8s.daemon-flanneld[7605]: Error: dial tcp: lookup none on 192.168.4.1:53: no such host
microk8s.daemon-flanneld[7605]: /coreos.com/network/config is not in etcd. Probably a first time run.
microk8s.daemon-flanneld[7605]: Error: client: etcd cluster is unavailable or misconfigured; error #0: dial tcp: lookup none on 192.168.4.1:53: no such host
microk8s.daemon-flanneld[7605]: error #0: dial tcp: lookup none on 192.168.4.1:53: no such host

error (as seen in /var/log/syslog)...

Also, at some point the workers developed:

microk8s.daemon-flanneld[2697]: E0802 17:49:17.214741 2697 main.go:289] Error registering network: failed to configure interface flannel.1: failed to ensure address of interface flannel.1: link has incompatible addresses. Remove additional addresses and try again

for no apparent reason. After some more research using my favourite search engine, I got to:

sudo ip link delete flannel.1

which fixed it.

The last error I wrestled with was containerd on one of the worker nodes complaining that it could not remove a container:

Aug 3 21:05:28 cluster-w3 microk8s.daemon-containerd[25774]: time="2020-08-03T21:05:28.176028102+01:00" level=error msg="RemoveContainer for \"24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34\" failed" error="failed to remove volatile container root directory \"/var/snap/microk8s/common/run/containerd/io.containerd.grpc.v1.cri/containers/24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34\": unlinkat /var/snap/microk8s/common/run/containerd/io.containerd.grpc.v1.cri/containers/24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34/io/009844907/.nfs00000000018e17850000003e: device or resource busy"
Aug 3 21:05:28 cluster-w3 microk8s.daemon-kubelet[459]: E0803 21:05:28.176924 459 remote_runtime.go:261] RemoveContainer "24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34" from runtime service failed: rpc error: code = Unknown desc = failed to remove volatile container root directory "/var/snap/microk8s/common/run/containerd/io.containerd.grpc.v1.cri/containers/24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34": unlinkat /var/snap/microk8s/common/run/containerd/io.containerd.grpc.v1.cri/containers/24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34/io/009844907/.nfs00000000018e17850000003e: device or resource busy
Aug 3 21:05:28 cluster-w3 microk8s.daemon-kubelet[459]: E0803 21:05:28.177137 459 kuberuntime_gc.go:143] Failed to remove container "24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34": rpc error: code = Unknown desc = failed to remove volatile container root directory "/var/snap/microk8s/common/run/containerd/io.containerd.grpc.v1.cri/containers/24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34": unlinkat /var/snap/microk8s/common/run/containerd/io.containerd.grpc.v1.cri/containers/24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34/io/009844907/.nfs00000000018e17850000003e: device or resource busy

The rest of the cluster was fine and no issues were seen, apart from the logs being swamped with errors like the above.

The fix was to temporarily stop containerd and remove the offending directories. Note: more than one container had failed, so I needed to pick them all out of the logs and do:

sudo systemctl stop snap.microk8s.daemon-containerd.service
# there might be more than one failing container! Repeat the rm for every ID found in the logs
sudo rm -rf /var/snap/microk8s/common/run/containerd/io.containerd.grpc.v1.cri/containers/24fc0a559670740d79d6a9b9f5dcef5b44444b0fbb69fc7d7fe20324fdaa7e34
sudo systemctl start snap.microk8s.daemon-containerd.service
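Picking the IDs out of the logs by hand is error-prone, so here is a Python sketch that collects every container directory mentioned in a "failed to remove volatile container root directory" error, deduplicated and in first-seen order. It matches both the containerd form (with escaped quotes) and the kubelet form shown above, and it only prints rm commands for review rather than deleting anything.

```python
# Sketch: collect containerd directories that failed to be removed, from syslog.
import os
import re
from pathlib import Path

# Matches both the containerd form (\"...\") and the kubelet form ("...").
_FAILED = re.compile(
    r'failed to remove volatile container root directory \\?"'
    r"(/var/snap/microk8s/common/run/containerd/io\.containerd\.grpc\.v1\.cri/containers/[0-9a-f]{64})"
)


def failed_container_dirs(log_text: str) -> list[str]:
    """Unique container directories from 'failed to remove' errors, first-seen order."""
    return list(dict.fromkeys(m.group(1) for m in _FAILED.finditer(log_text)))


if __name__ == "__main__":
    syslog = Path("/var/log/syslog")
    if syslog.exists() and os.access(syslog, os.R_OK):
        # Print the commands instead of deleting, so they can be reviewed first.
        for directory in failed_container_dirs(syslog.read_text(errors="replace")):
            print(f"sudo rm -rf {directory}")
```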

Physical Realisation

Following my son's idea, we mounted everything on the board and hung it on the wall:

A small USB3 extension cable is yet to be added to the picture. Also, as you can see, there's space for a 3rd worker and some room next to the switch and power adapter for future expansion.

Extras - Network Configuration

As a bonus, I decided to move the whole cluster to a 'dmz-lan'. I dedicated a whole port on the Draytek router to it, configured a separate network (a LAN on 192.168.4.0/22, with 192.168.5.0/24 inside it for 'static' IPs dedicated to particular services) and set up firewall filtering so that my home network (LAN) can access the DMZ but not the other way round, except for carefully selected services and only when needed.

Conclusion

This exercise lasted slightly longer than a week and produced a nice, small, manageable Kubernetes cluster, ideal for playtesting different solutions and such. It has scope for extension and, aside from academic purposes, will serve a real-life purpose: running various containers with services we use daily.
